At Laminar, we're excited to announce our newest feature: Online Evaluations. This feature allows engineering teams to run custom evaluators, either LLM-based or Python-based, on their LLM calls as they happen in production.

What are Online Evaluations?

Online evaluations run automated checks on your LLM calls as they happen in production and attach the results as labels. Instead of collecting data for post-hoc analysis, Laminar evaluates each model call in real time by analyzing the inputs and outputs of your LLM spans.

Why We Built It

When you have thousands of LLM calls happening every day, it's hard to know whether your LLMs are behaving as expected. Online evaluations let you monitor output quality in real time, collect performance statistics, and catch issues early, before they reach more users.

How It Works

Laminar's online evaluations system is built around three core concepts:

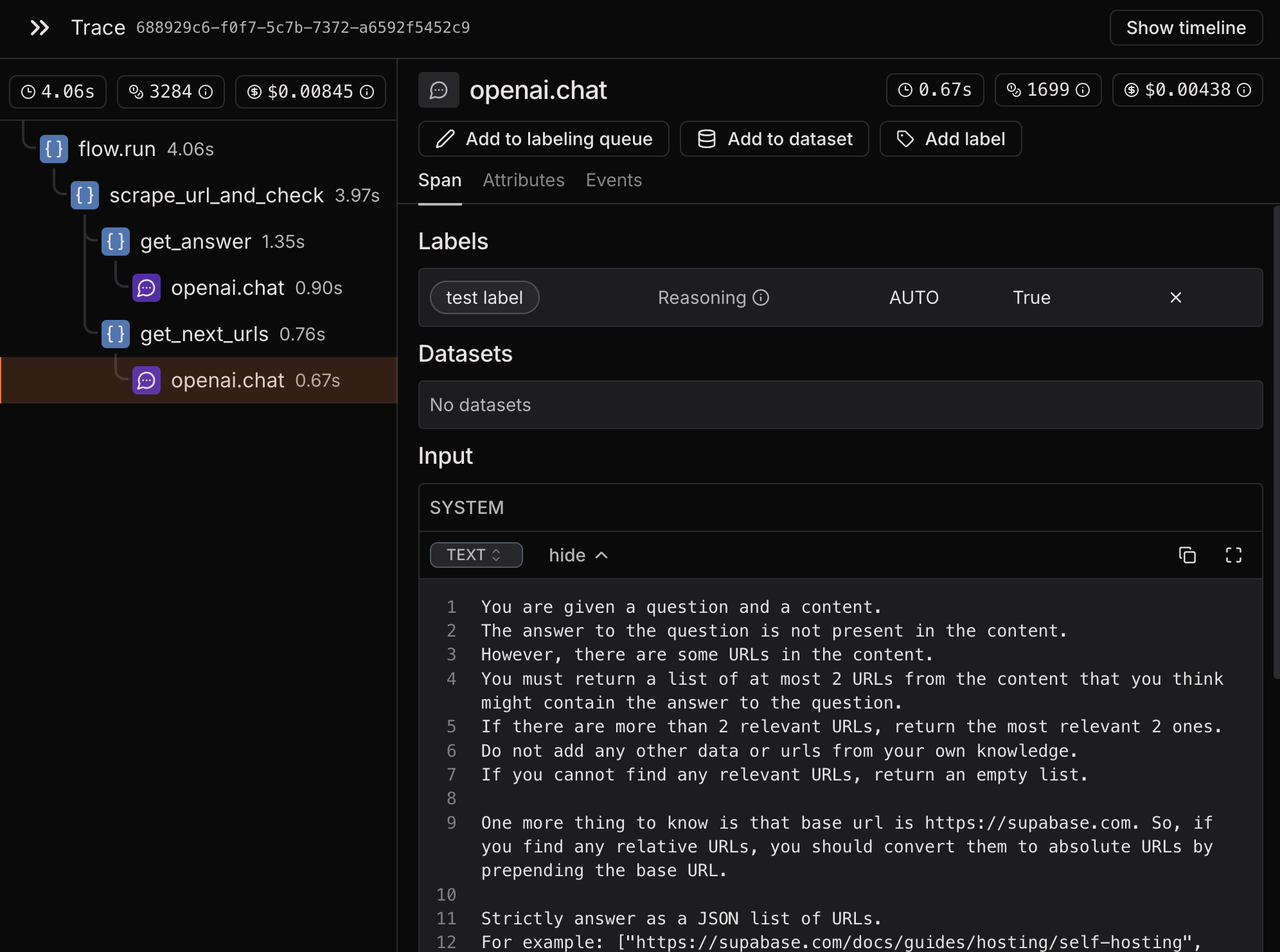

1. Span Paths

Span paths uniquely identify where LLM calls happen in your code. They're automatically constructed from the location of the call, making it easy to track specific functions and endpoints.

2. Span Labels

Labels are values attached to spans that indicate evaluation results.

3. Evaluators

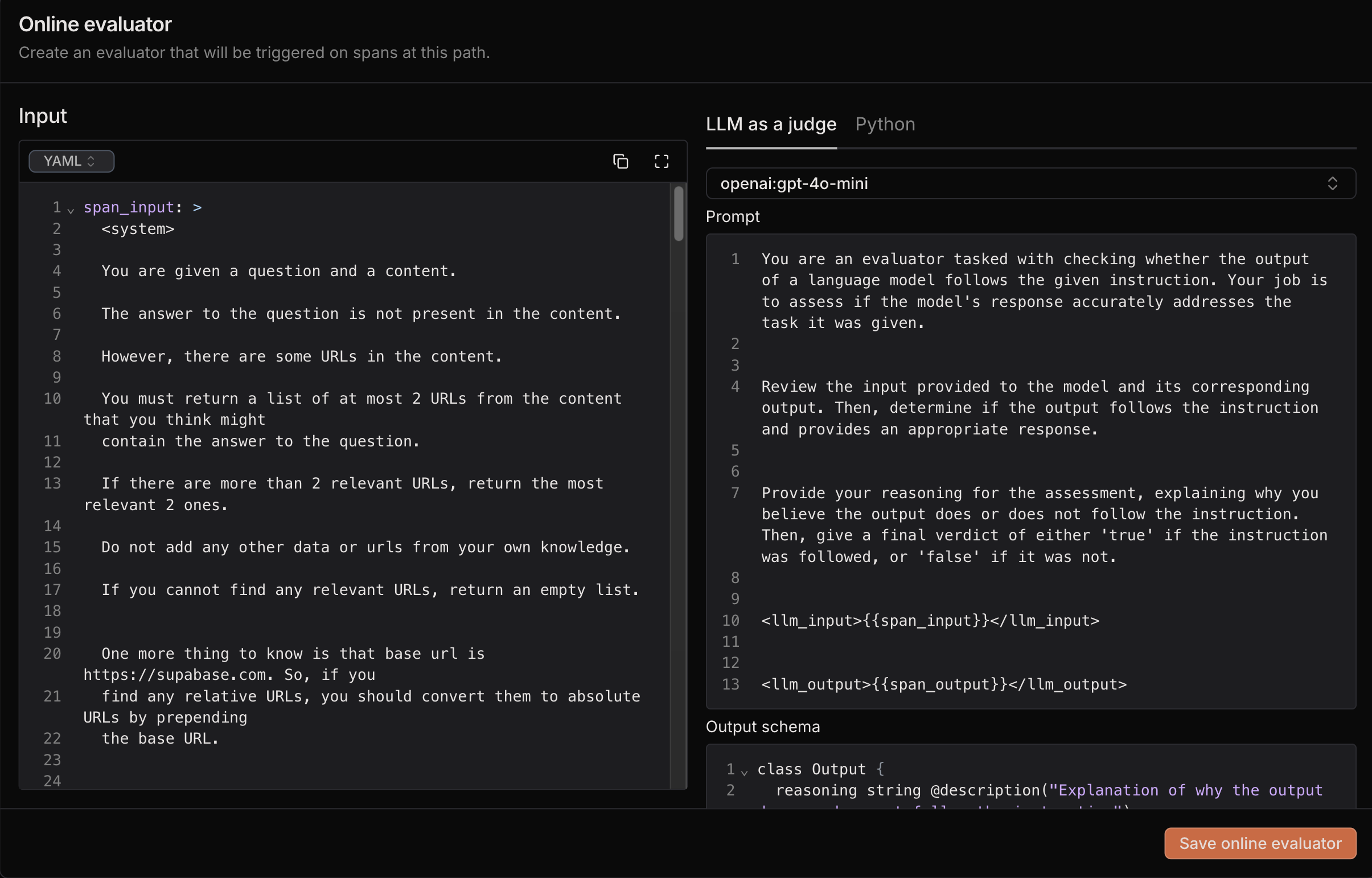

Evaluators analyze inputs and outputs to generate labels. Laminar supports two types of evaluators:

- LLM-based evaluators

- Python-based evaluators
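As an illustration of the Python-based kind, the sketch below checks that a span's output is a JSON object with an `answer` field and returns a label value. The function name `evaluate`, its two string parameters, and the label values are assumptions for this example; check the documentation for the exact signature Laminar expects.

```python
import json


def evaluate(span_input, span_output):
    """Return a label value for one LLM call (hypothetical signature)."""
    try:
        parsed = json.loads(span_output)
    except json.JSONDecodeError:
        # The model did not return parseable JSON at all.
        return "invalid_json"
    if not isinstance(parsed, dict) or "answer" not in parsed:
        # Valid JSON, but not the shape the application expects.
        return "missing_answer"
    return "ok"
```

A deterministic check like this is cheap to run on every call. An LLM-based evaluator is a better fit for judgments that are hard to express in code, such as tone or relevance.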

Setting Up Evaluations

Getting started with Laminar's online evaluations is straightforward:

- Navigate to "Traces" in your Laminar dashboard

- Select the span you want to evaluate

- Click "Add Label" and create or choose a label class

- Configure your evaluator:

  - Choose between Python code or LLM-based evaluation

  - Test your evaluator directly in the UI

- Save and enable for production

Once enabled, Laminar automatically runs your evaluator on your LLM calls and attaches the resulting labels to the spans. These labels are marked as AUTO in the dashboard.

Best Practices

Start Simple

- Begin with basic format and content checks

- Add more sophisticated evaluations gradually

- Monitor evaluator performance impact

Layer Your Checks

- Technical validation (format, structure)

- Content validation (completeness, relevance)

- Quality metrics (coherence, accuracy)
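One way to layer checks is to run them in order, cheapest first, and report the first layer that fails. The sketch below is only illustrative: the check functions, the 200-word limit, and the layer names are assumptions, and the word-overlap and length checks are crude placeholders for real relevance and quality evaluators.

```python
def is_non_empty(prompt, output):
    # Technical layer: the output must contain something.
    return bool(output.strip())


def mentions_prompt_terms(prompt, output):
    # Content layer: crude relevance proxy based on shared longer words.
    terms = {w.lower() for w in prompt.split() if len(w) > 3}
    return any(term in output.lower() for term in terms)


def is_reasonable_length(prompt, output):
    # Quality layer: placeholder for a richer (possibly LLM-based) check.
    return len(output.split()) <= 200


LAYERS = [
    ("technical", is_non_empty),
    ("content", mentions_prompt_terms),
    ("quality", is_reasonable_length),
]


def first_failed_layer(prompt, output):
    """Return the name of the first failing layer, or None if all pass."""
    for layer, check in LAYERS:
        if not check(prompt, output):
            return layer
    return None
```

Ordering the layers this way means an empty or malformed output is labeled as a technical failure without spending time on the more expensive checks.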

Monitor Results

- Track evaluation trends over time

- Regularly review and refine criteria
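Tracking a trend can be as simple as computing a daily pass rate from the labels. The sketch below assumes you already have label results as `(day, label)` pairs and that `"ok"` is the passing label. How you get labels out of Laminar is not shown here, and both the record shape and the label value are hypothetical.

```python
from collections import defaultdict
from datetime import date


def daily_pass_rate(records, passing_label="ok"):
    """Map each day to the fraction of its labels equal to passing_label."""
    totals = defaultdict(int)
    passes = defaultdict(int)
    for day, label in records:
        totals[day] += 1
        if label == passing_label:
            passes[day] += 1
    # Sort by day so the result reads as a time series.
    return {day: passes[day] / totals[day] for day in sorted(totals)}


# Hypothetical sample data for illustration only.
records = [
    (date(2024, 1, 1), "ok"),
    (date(2024, 1, 1), "invalid_json"),
    (date(2024, 1, 2), "ok"),
]
rates = daily_pass_rate(records)
```

A sudden drop in the daily rate is a prompt to review recent changes to the prompt, the model, or the evaluator criteria themselves.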

Conclusion

Online evaluations represent a significant step forward in LLM operations, bringing immediate quality feedback to production systems. With Laminar's implementation, teams can maintain high standards while gathering valuable insights about their models' behavior. Try out online evaluations today and let us know what you think! Check out our documentation for detailed setup instructions and best practices.